A Visibility Intelligence breakdown of how the 1903 Wright Flyer exposed the mechanics of Machine Trust and why AI systems can’t cite what you can’t prove.


Definition

Machine Trust is the level of reliability AI systems assign to an entity based on structured proof signals, consistent documentation, authoritative citations, cross-platform verification, and semantic stability that allows AI to classify, retrieve, and recommend with confidence.

Analogy Quote — Curtiss Witt

“You didn’t fly if no one can prove you did.”

Historical Story

December 17, 1903. Kitty Hawk, North Carolina. Cold wind. Empty beach. Two bicycle mechanics from Dayton, Ohio, stood beside a fragile wooden aircraft.

Orville Wright climbed into the pilot position. Wilbur stood at the wing tip, steadying the craft.

The engine roared. The propellers spun.

At 10:35 a.m., the Wright Flyer lifted off the ground.

Twelve seconds. 120 feet. The first sustained, controlled flight of a powered, heavier-than-air aircraft in human history.

But here’s what most people don’t know: that flight almost didn’t matter.

Because others claimed the title too. Samuel Langley had government funding and institutional backing. Gustave Whitehead had newspaper articles. Alberto Santos-Dumont had public demonstrations in Paris.

The Wright Brothers had documentation.

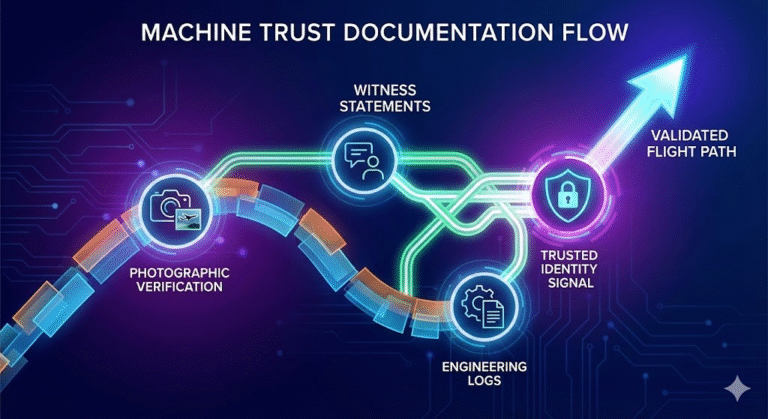

Five witnesses. A photograph. Detailed flight logs. Engineering notebooks. Patent applications. Telegrams sent immediately after the flight.

They didn’t just fly. They proved they flew.

And that proof (structured, verifiable, and authoritative) is why history credits the Wright Brothers rather than the others.

Discovery without documentation is rumor. Documentation is trust.

Our Connection

The Wright Brothers didn’t just invent flight—they invented the modern standard for Machine Trust.

They understood something most inventors didn’t: achievement without evidence is invisible.

You can be first. You can be revolutionary. You can be groundbreaking. But if you can’t prove it—if you can’t structure it, document it, verify it—no one will believe you. No one will cite you. No one will remember you.

Today, businesses face the same challenge with AI visibility.

You can have the best methodology. The most innovative approach. The clearest expertise. But if AI systems can’t verify your claims—if you lack schema, citations, authoritative signals, consistent documentation—you don’t exist in the knowledge graph.

This is why Betweener Engineering™—a new discipline created by The Black Friday Agency—prioritizes Domain A (structural truth and proof) over Domain B (narrative and story).

AI systems don’t trust what you say. They trust what you can prove.

Modern Explanation

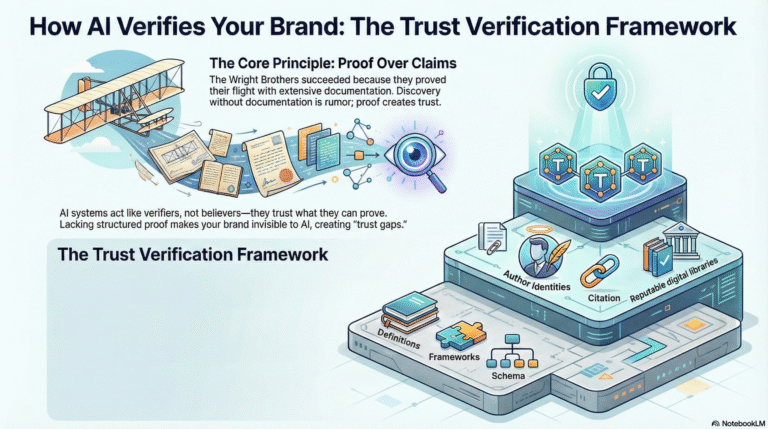

What makes AI trust a brand?

AI systems are not believers. They are verifiers.

When someone asks ChatGPT, Claude, or Gemini a question, the AI doesn’t just search for content—it searches for proof.

It looks for:

- Structured documentation (schema, definitions, frameworks)

- Authoritative signals (citations, cross-references, author identity)

- Consistency (same terminology across platforms)

- Verification loops (multiple sources confirming the same truth)

- Entity clarity (machine-readable identity)

Many businesses fall short on Answer Engine Optimization (AEO) and Generative Engine Optimization (GEO) because they treat visibility like storytelling. They focus on narrative. They optimize for creativity.

But AI doesn’t care about creativity. It cares about proof.

This is why Domain A—the structural, verifiable truth of your business—creates Machine Trust. AI systems trust what they can parse, verify, and cite.

When you lack:

- Schema

- Definitions

- Frameworks

- Citations

- Author identity

- Cross-platform coherence

…you create trust gaps. And trust gaps trigger invisibility.

The Wright Brothers understood this in 1903. They didn’t just fly—they built a Proof Loop. They documented. They verified. They structured their evidence so future systems (historians, institutions, encyclopedias) could cite them with confidence.

When you apply The TBFA 8-Step Betweener OS, you engineer the same proof architecture for AI systems. You move from claim to verification. From narrative to structure. From invisible to cited.

This is how you build Category-of-One Identity. This is how you achieve Semantic Endurance.

Framework: The Trust Verification Framework

This is how you engineer Machine Trust: by making your business easy for AI systems to verify, classify, and cite, consistently and over time.

Layer 1: Documentation

AI systems can’t trust what they can’t verify. You must document your expertise through structured proof signals: schema, definitions, frameworks, AEO-optimized content, and named methodologies. Build FAQ pages. Create glossary terms. Publish case studies. This is Domain A—the verifiable truth AI can parse and trust.
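To make the glossary-term idea concrete, here is a minimal Python sketch that expresses one definition from this article as schema.org `DefinedTerm` JSON-LD and wraps it in the `<script type="application/ld+json">` element a page would carry. The glossary URL, the set name, and the helper names (`defined_term_jsonld`, `as_script_tag`) are hypothetical placeholders; treat this as an illustration of the markup pattern, not a required format.

```python
import json

# Hypothetical placeholder URL; replace with your own glossary page.
GLOSSARY_URL = "https://example.com/glossary"


def defined_term_jsonld(name: str, description: str, term_url: str) -> dict:
    """Build a schema.org DefinedTerm node that belongs to a DefinedTermSet."""
    return {
        "@context": "https://schema.org",
        "@type": "DefinedTerm",
        "@id": term_url,
        "name": name,
        "description": description,
        "inDefinedTermSet": {
            "@type": "DefinedTermSet",
            "@id": GLOSSARY_URL,
            "name": "Glossary",  # hypothetical set name
        },
    }


def as_script_tag(node: dict) -> str:
    """Wrap JSON-LD in a script element for embedding in HTML."""
    payload = json.dumps(node, indent=2, ensure_ascii=False)
    # Escape "</" as "<\/" (still valid JSON) so text can't close the tag early.
    payload = payload.replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


term = defined_term_jsonld(
    name="Machine Trust",
    description=(
        "The level of reliability AI systems assign to an entity based on "
        "structured proof signals, consistent documentation, authoritative "
        "citations, cross-platform verification, and semantic stability."
    ),
    term_url=GLOSSARY_URL + "#machine-trust",
)

if __name__ == "__main__":
    print(as_script_tag(term))
```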

Layer 2: Authority

Documentation without authority is noise. Authority comes from citations, author identity, cross-platform presence, and institutional signals. Publish under consistent author names. Build schema for people and organizations. Get cited by authoritative sources. Link internally. This is how you create Fusion Nodes—unified identity signals AI can’t ignore.
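A minimal sketch of the person and organization markup this layer describes, assuming schema.org JSON-LD published as a single `@graph`. Every name and URL is a hypothetical placeholder. The design choice worth noting is that the article references its author and publisher by `@id` instead of repeating their details, so every page can point at one consistent identity node.

```python
import json

# All names and URLs below are hypothetical placeholders.
SITE = "https://example.com"

organization = {
    "@type": "Organization",
    "@id": f"{SITE}/#organization",
    "name": "Example Co",
    "url": SITE,
    # sameAs lists the same entity's profiles on other platforms.
    "sameAs": ["https://www.linkedin.com/company/example-co"],
}

person = {
    "@type": "Person",
    "@id": f"{SITE}/#jane-example",
    "name": "Jane Example",
    "jobTitle": "Founder",
    "worksFor": {"@id": organization["@id"]},
    "sameAs": ["https://www.linkedin.com/in/jane-example"],
}

article = {
    "@type": "Article",
    "@id": f"{SITE}/machine-trust#article",
    "headline": "What Is Machine Trust?",
    # Point at the identity nodes by @id rather than duplicating them.
    "author": {"@id": person["@id"]},
    "publisher": {"@id": organization["@id"]},
}

graph = {"@context": "https://schema.org", "@graph": [organization, person, article]}

if __name__ == "__main__":
    print(json.dumps(graph, indent=2))
```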

Layer 3: Coherence

Authority without coherence creates confusion. Coherence means using the same terminology, frameworks, and definitions across all platforms. AI memory stabilizes when it sees consistency. Publish the same language on your website, LinkedIn, podcasts, YouTube, and articles. This is how you achieve Category-of-One Identity and Semantic Endurance.
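One way to check coherence in practice is to compare each platform's copy of a definition against a canonical wording and flag the copies that have drifted. The sketch below uses Python's standard `difflib.SequenceMatcher`; the 0.9 threshold, the normalization rules, and the function names are assumptions chosen for illustration, and character-level similarity is only a rough proxy for whether two definitions mean the same thing.

```python
import difflib
import re


def normalize(text: str) -> str:
    """Lowercase, turn punctuation into spaces, and collapse whitespace so
    formatting differences alone don't count as drift."""
    text = re.sub(r"[^\w\s-]", " ", text.lower())
    return " ".join(text.split())


def find_drift(canonical: str, copies: dict[str, str], threshold: float = 0.9) -> dict[str, float]:
    """Map each platform whose copy scores below `threshold`
    (SequenceMatcher ratio, 0.0 to 1.0) to its rounded score."""
    base = normalize(canonical)
    drifted = {}
    for platform, text in copies.items():
        ratio = difflib.SequenceMatcher(None, base, normalize(text)).ratio()
        if ratio < threshold:
            drifted[platform] = round(ratio, 2)
    return drifted


if __name__ == "__main__":
    canonical = "Machine Trust is the level of reliability AI systems assign to an entity."
    copies = {  # hypothetical platform copies
        "website": canonical,
        "linkedin": "Machine trust is the level of reliability AI systems assign to an entity!",
        "podcast_notes": "Machine Trust means how much AI likes your brand.",
    }
    print(find_drift(canonical, copies))
```

Here only `podcast_notes` is flagged; the LinkedIn copy differs in case and punctuation alone, which normalization removes.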

Action Steps

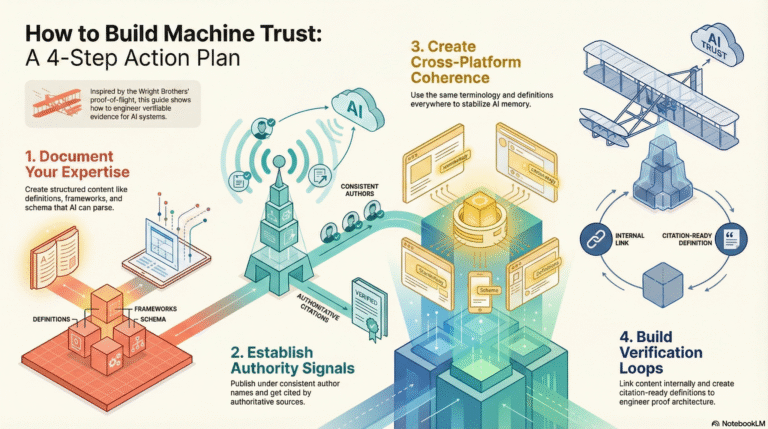

Step 1: Document Your Expertise

Build AEO-optimized definitions. Create frameworks. Name your methodology. Publish structured content AI can parse. Deploy schema on your website. This is your Domain A foundation—the proof layer AI systems require.

Step 2: Establish Authority Signals

Publish under consistent author names. Build schema for people and organizations. Get cited by authoritative sources. Add internal links. Use the Identity Simulator to audit how AI currently perceives your authority.

Step 3: Create Cross-Platform Coherence

Use the same terminology everywhere. Publish the same definitions on your website, LinkedIn, podcasts, YouTube, and articles. AI memory stabilizes through consistency. Consistency creates Machine Trust.

Step 4: Build Verification Loops

Link your content internally. Reference your own frameworks. Create citation-ready definitions. Apply The TBFA 8-Step Betweener OS to engineer proof architecture that AI systems can verify, trust, and cite over the long term.
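A verification loop depends on internal links actually connecting your pages. This sketch, assuming you already have each page's HTML in hand, uses Python's standard `html.parser` to collect same-host links and report pages that no other page links to. The function names and sample URLs are hypothetical, and it skips real-world details such as trailing-slash normalization, redirects, and crawling.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag fed to the parser."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self.hrefs.extend(value for name, value in attrs if name == "href" and value)


def internal_links(page_url: str, html: str) -> set[str]:
    """Absolute, fragment-free URLs on the page's own host that it links to."""
    collector = LinkCollector()
    collector.feed(html)
    host = urlparse(page_url).netloc
    links = set()
    for href in collector.hrefs:
        absolute = urljoin(page_url, href).split("#", 1)[0]
        if urlparse(absolute).netloc == host:
            links.add(absolute)
    return links


def orphan_pages(pages: dict[str, str]) -> set[str]:
    """Pages in `pages` that no other page in `pages` links to."""
    linked = set()
    for url, html in pages.items():
        linked |= internal_links(url, html) - {url}
    return set(pages) - linked


# Hypothetical sample site: URL -> HTML.
SAMPLE_PAGES = {
    "https://example.com/": '<a href="/glossary">Glossary</a> <a href="/frameworks">Frameworks</a>',
    "https://example.com/glossary": '<a href="/frameworks#layers">Layers</a>',
    "https://example.com/frameworks": '<a href="https://other.example.org/">External</a>',
    "https://example.com/case-study": "<p>No links here.</p>",
}

if __name__ == "__main__":
    print(sorted(orphan_pages(SAMPLE_PAGES)))
```

With these sample pages, the case study is reported as an orphan, and so is the home page, only because no sample page links back to it.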

FAQs

What makes AI trust a brand?

AI systems trust brands that provide structured proof signals: schema, definitions, frameworks, authoritative citations, cross-platform coherence, and consistent documentation. Trust comes from verification, not narrative. AI can only cite what it can verify.

What is Machine Trust?

Machine Trust is the level of reliability AI systems assign to an entity based on structured proof signals, consistent documentation, authoritative citations, cross-platform verification, and semantic stability that allows AI to classify, retrieve, and recommend with confidence.

Why does transparency strengthen machine trust?

Transparency creates verifiability. When you document your methods, define your terms, cite your sources, and structure your expertise, AI systems can parse, verify, and trust your identity. Opacity creates ambiguity. Ambiguity destroys trust.

What is an authority schema stack?

An authority schema stack is a layered system of machine-readable proof signals built from schema.org types: Organization, Person, Service, HowTo, DefinedTerm, and CreativeWork. Together, they describe a consistent, verifiable identity that AI systems can trust and cite.
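To audit a stack like this, a small checker can report which of the listed types are absent from a page's JSON-LD `@graph` and which `@id` references point at nodes the graph never defines. This is a hypothetical sketch with placeholder data: it matches types exactly (so a subtype such as `Article` does not count as `CreativeWork`), and it treats references to nodes defined on other pages as dangling.

```python
# The six schema.org types named in the stack above.
STACK_TYPES = {"Organization", "Person", "Service", "HowTo", "DefinedTerm", "CreativeWork"}


def iter_refs(value):
    """Yield the target of every {"@id": ...} reference nested inside value."""
    if isinstance(value, dict):
        if set(value) == {"@id"}:
            yield value["@id"]
        for child in value.values():
            yield from iter_refs(child)
    elif isinstance(value, list):
        for item in value:
            yield from iter_refs(item)


def node_types(node: dict) -> set[str]:
    """@type may be a single string or a list of strings."""
    t = node.get("@type", [])
    return set(t) if isinstance(t, list) else {t}


def audit_stack(doc: dict) -> tuple[set[str], set[str]]:
    """Return (stack types missing from the graph, @id references no node defines)."""
    nodes = doc.get("@graph", [])
    defined_ids = {n["@id"] for n in nodes if "@id" in n}
    present = set().union(*(node_types(n) for n in nodes))
    dangling = {ref for n in nodes for ref in iter_refs(n) if ref not in defined_ids}
    return STACK_TYPES - present, dangling


# Hypothetical example graph with placeholder URLs.
EXAMPLE_DOC = {
    "@context": "https://schema.org",
    "@graph": [
        {"@type": "Organization", "@id": "https://example.com/#org", "name": "Example Co"},
        {"@type": "Person", "@id": "https://example.com/#jane", "name": "Jane Example",
         "worksFor": {"@id": "https://example.com/#org"}},
        {"@type": "Service", "@id": "https://example.com/#audit", "name": "Visibility audit",
         "provider": {"@id": "https://example.com/#org"}},
        {"@type": "DefinedTerm", "@id": "https://example.com/glossary#machine-trust",
         "name": "Machine Trust", "inDefinedTermSet": {"@id": "https://example.com/glossary"}},
    ],
}

if __name__ == "__main__":
    missing, dangling = audit_stack(EXAMPLE_DOC)
    print("missing types:", sorted(missing))
    print("dangling refs:", sorted(dangling))
```

For `EXAMPLE_DOC`, it reports `HowTo` and `CreativeWork` as missing and flags the glossary `@id` as dangling because no node in the graph defines it.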

How does author identity matter for AI?

AI systems trust content more when it’s attributed to verified, consistent author identities. Author markup (a schema.org Person linked through a page’s author property) tells AI: "This person wrote this. This person has expertise." Without author identity, content is anonymous, and anonymous content has lower Machine Trust.

What is a Proof Loop?

A Proof Loop is a verification architecture where your content references your own pages and frameworks, links internally, cites authoritative sources, and reinforces the same definitions across platforms. Proof Loops create Semantic Endurance by stabilizing AI memory through structured coherence.

Why does domain clarity affect trust?

AI systems trust entities that are clearly defined within a specific domain. Ambiguous category placement creates confusion. Clear category placement creates Category-of-One Identity—a signal AI can classify, trust, and cite without hesitation.

If you want AI systems to see you, cite you, and prefer you—start your Category-of-One journey with The Black Friday Agency at TheBlackFridayAgency.com.

Free Training

Free Training: TheBlackFridayAgency.com/Training.

Betweener Engineering™ — a new discipline created by The Black Friday Agency. Explore the discipline: BetweenerEngineering.com