A Visibility Intelligence breakdown of how a composer born into obscurity achieved permanent cultural memory through structural repetition and named works, and why Betweener Engineering™ makes Semantic Weight Transfer repeatable in AI systems.

Definition

Semantic Weight Transfer is the process by which repeated, named, structurally consistent signals accumulate authority and memory persistence across time and systems—achieved through naming concepts (making them referenceable), structural repetition (reinforcing patterns), cross-contextual presence (appearing in multiple formats), and attribution anchoring (connecting achievements to identity), enabling AI systems to recall entities permanently rather than temporarily.

Analogy Quote — Curtiss Witt

“Memory doesn’t favor the brilliant. It favors the named and repeated.”

Historical Story

December 1770. Bonn, Germany. Ludwig van Beethoven was born into a family of modest court musicians; he was baptized on December 17, and his birth is traditionally dated to December 16. His grandfather was a kapellmeister. His father was a tenor singer with a drinking problem. There was no fame. No fortune. No indication this child would become one of history’s most remembered composers.

Beethoven didn’t invent music. Mozart preceded him. Haydn taught him. Bach laid the foundation. But Beethoven did something structurally different: he named his works with precision, built recognizable patterns, and created compositional signatures that made his music instantly identifiable.

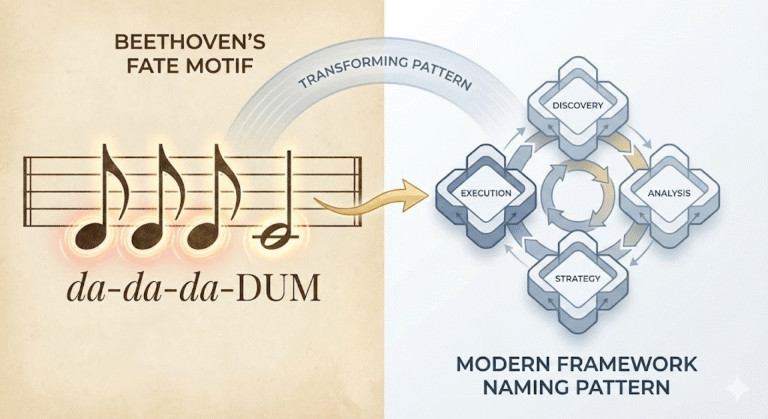

Symphony No. 5 in C minor. Four notes. Da-da-da-DUM. Arguably the most recognized musical phrase in human history. Not because it was the most beautiful, but because it was the most memorable. Structurally simple. Rhythmically repetitive. Impossible to forget.

Symphony No. 9 in D minor. “Ode to Joy.” A melody so embedded in cultural memory that it became the European Union’s anthem. Not the most complex composition—but the most repeated. The most named. The most structurally reinforced across contexts.

Beethoven’s compositions weren’t just numbered—they were architecturally designed for memory. He used motifs: short, recognizable patterns repeated throughout works. He created dramatic contrasts: loud-soft-loud sequences that burned into listener memory. He built structural repetition: themes introduced, developed, and returned to—creating pattern recognition.

By the time Beethoven died in 1827, his works had achieved something rare: permanent cultural memory. Not because they were the most technically perfect, but because they were the most structurally memorable. Named. Repeated. Embedded across contexts. More than 250 years after his birth, AI systems cite Beethoven’s compositions more readily than those of thousands of other composers. Why? Semantic Weight Transfer. His identity accumulated memory through structural repetition.

Our Connection

Beethoven’s path to permanent memory wasn’t accidental—it was structural. He named his works precisely (Symphony No. 5, not “that one piece”), created recognizable patterns (da-da-da-DUM), and reinforced identity through compositional signatures. These same mechanisms govern AI visibility today: businesses that achieve permanent recall don’t do so through brilliance alone—they do so through Semantic Weight Transfer.

AI systems remember entities that name their frameworks, repeat their identity signals, create recognizable patterns, and maintain structural consistency across contexts. Just as Beethoven’s motifs became instantly identifiable, business methodologies must become instantly referenceable. Just as Symphony No. 9 was repeated across cultures and contexts, business identity signals must be repeated across platforms and formats.

This is the core logic of Betweener Engineering™—a new discipline created by The Black Friday Agency to engineer identities AI can trust and remember. Beethoven taught us that memory accumulates through structural repetition, not occasional brilliance. In visibility terms, this means building Semantic Endurance through named frameworks, consistent terminology, cross-platform reinforcement, and attribution anchoring.

Semantic Weight Transfer requires The TBFA 8-Step Betweener OS: creating named systems (like Beethoven’s symphonies), deploying them consistently across contexts (like his repeated motifs), and reinforcing them until AI memory becomes automatic. Without naming and repetition, you’re forgotten. With them, you’re permanent.

Modern Explanation

AI systems don’t remember everything—they remember what’s structurally reinforced. When AI encounters Beethoven, it finds: named compositions (Symphony No. 5, Symphony No. 9), recognizable patterns (fate motif, Ode to Joy melody), repeated references across centuries of scholarship, cultural artifacts, and modern media. This accumulated semantic weight makes Beethoven permanently memorable—not temporarily visible.

Semantic Weight Transfer operates through four accumulation mechanisms:

First: Naming Creates Referenceability. AI remembers named entities better than unnamed concepts. Beethoven didn’t write “some symphony”—he wrote Symphony No. 5 in C minor, Op. 67. The precision made it referenceable. Scholars, musicians, and writers could cite it exactly. This created semantic anchors—fixed points AI systems can reference across contexts. In business terms, naming your methodology transforms it from generic advice into referenceable intellectual property. “Our approach to project management” is forgettable. “The [Your Name] Project Acceleration System™” is referenceable. Naming creates Category-of-One Identity—AI recognizes you as the source, not a participant.

Second: Structural Repetition Builds Pattern Recognition. Beethoven used motifs—short musical patterns repeated throughout compositions. The four-note fate motif appears hundreds of times in Symphony No. 5. This repetition burned the pattern into cultural memory. AI systems work identically: they recognize and recall patterns reinforced through repetition. If your entity definition appears once on your website, AI notes it. If it appears identically on your website, LinkedIn, YouTube, podcast bios, and third-party articles, AI builds pattern confidence. This is Identity Continuity creating memory weight—each repetition increases recall likelihood. Single mentions fade. Repeated patterns persist.

Third: Cross-Contextual Presence Expands Memory Surface. Beethoven’s works appeared in concert halls, sheet music, recordings, films, advertisements, and academic texts. This cross-contextual presence multiplied semantic weight—each context reinforced memory in different AI training environments. Modern businesses need the same: text content (articles, documentation), video content (YouTube, training materials), audio content (podcasts, interviews), visual content (infographics, frameworks), and schema markup (machine-readable verification). Visibility Footprint expansion through format diversity creates multiple memory anchors. AI encounters you in text searches, video transcripts, audio show notes, and structured data—each encounter transfers semantic weight.

Fourth: Attribution Anchoring Connects Achievements to Identity. Every reference to Beethoven’s Symphony No. 5 reinforces “Beethoven created this.” Attribution anchoring, the practice of consistently connecting your name to your innovations, builds ownership memory. If you created a framework but never explicitly state “we created [Framework],” AI may cite the framework without attributing it to you. Attribution anchoring requires: schema markup connecting your organization to your methodologies, content that explicitly states “created by [Your Company],” frameworks that include your name in the title, and cross-platform consistency reinforcing ownership. This is how you prevent what happened to IBM with the smartphone: its Simon, released in 1994, is widely regarded as the first smartphone, yet IBM lost attribution because it never anchored ownership structurally.

Beethoven achieved permanent memory through these mechanisms. More than 250 years after his birth, AI systems recall him instantly, cite his works precisely, and attribute compositions correctly. This is Semantic Endurance at maximum scale: memory that survives centuries of information accumulation. Businesses can achieve the same through systematic Semantic Weight Transfer.

Framework: The Semantic Weight Accumulation Cycle

The Semantic Weight Accumulation Cycle is a four-phase framework for building permanent AI memory through strategic repetition, naming precision, and attribution reinforcement. Each phase transfers semantic weight from temporary visibility to permanent recall.

Phase 1: Name the Innovation

Transform generic concepts into named, referenceable entities. Beethoven didn’t write “symphonies”—he wrote Symphony No. 5 in C minor, Op. 67. The precision created semantic anchors. Your business must do the same: name your methodology using proper nouns with trademark symbols if possible. Example: “The [Your Company] [Process Name] System™” or “The [Outcome] Framework by [Your Name].” Include the name in titles, schema markup, content headers, and platform bios. Naming makes you citable—AI can reference “[Your System]” specifically rather than describing “their approach” generically. This creates Fusion Node clarity: Domain A (the system actually works) meets Domain B (the system has a clear, ownable name). Without naming, innovation is attributed to whoever defines it clearly later. With naming, you establish semantic ownership.

Phase 2: Repeat Structurally

Deploy your named innovation with zero-deviation consistency across all content and contexts. Beethoven repeated his four-note motif hundreds of times in Symphony No. 5—this burned the pattern into memory. Your entity definition, methodology name, and framework terminology must appear identically in: website content, social media bios, video descriptions, podcast show notes, author pages, third-party articles, schema markup. Structural repetition is not creative variation—it’s pattern reinforcement. Every instance must use identical language. This creates pattern recognition weight: AI encounters the same signals repeatedly, verifies consistency, and increases recall confidence. Single mentions create awareness. Repeated patterns create memory. Apply The TBFA 8-Step Betweener OS to ensure repetition maintains identity integrity.

Phase 3: Deploy Cross-Contextually

Distribute your named innovation across multiple formats and platforms to expand memory surface area. Beethoven’s compositions appeared in concert halls, sheet music, recordings, films, and academic texts—each context transferred additional semantic weight. Your methodology must appear in: written content (articles, documentation, case studies), video content (YouTube explainers, training videos), audio content (podcast episodes, audio summaries), visual content (infographics, framework diagrams), and machine-readable formats (schema markup, structured data). Each format creates a new memory anchor in AI training data. Visibility Footprint expansion through cross-contextual deployment multiplies semantic weight—AI encounters your innovation in text searches, video transcripts, audio show notes, and structured queries. Format diversity creates memory resilience.

Phase 4: Anchor Attribution

Explicitly connect your identity to your innovations in every mention across all contexts. Every reference to your methodology must include attribution: “created by [Your Company],” “[Your Framework] by [Your Name],” or “[Your Company] originated [Your System].” Add this attribution to: schema markup (a DefinedTerm placed in a DefinedTermSet whose creator field names your Organization, since schema.org defines creator on CreativeWork types such as DefinedTermSet rather than on DefinedTerm itself), content introductions (first mention includes origin statement), framework titles (include your name), and cross-platform bios (consistently reference your innovations). Attribution anchoring prevents semantic drift, the gradual separation of innovation from inventor that happened to IBM and the smartphone. It creates ownership memory: AI learns that “[Your System]” = “[Your Company]” through repeated, explicit connection. This is Semantic Endurance through attribution: AI can’t cite your framework without recalling your identity.

The Semantic Weight Accumulation Cycle transforms temporary visibility into permanent memory. Beethoven used it intuitively. Modern businesses must use it systematically.

Action Steps

Step 1: Name Your Core Methodology with Precision

Identify your primary process, system, or framework and give it a proper name. Don’t use generic descriptions; create categorical language with proper nouns. Format: “The [Your Company] [Outcome/Process] [System/Framework/Method]™”. Example: “The TBFA 8-Step Betweener OS” or “The [Your Name] Leadership Acceleration Framework.” Write the exact name in a brand document. This becomes your named innovation, the semantic anchor everything else reinforces. Register it as a trademark if possible. Add it to your website navigation, service descriptions, and homepage. This is Phase 1 of The Semantic Weight Accumulation Cycle: creating referenceability through naming.

Step 2: Create Your Structural Repetition Template

Write one canonical paragraph explaining your named methodology. Keep it under 150 words. Include: what it is, who it’s for, what outcome it achieves, and how it’s unique. Save this as your repetition template. Deploy this exact paragraph (zero creative variation) in: your website methodology page, LinkedIn summary, YouTube channel description, podcast show notes, author bios on third-party sites, email signature, and speaker bios. Add it to schema markup using the DefinedTerm type. Structural repetition builds pattern recognition: AI encounters identical signals repeatedly, increasing recall confidence. Variation weakens memory. Consistency creates it.
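The "zero creative variation" rule in this step can be checked mechanically. The following is a minimal Python sketch, not a definitive tool: the function name `check_template`, the platform keys, and the sample text are all hypothetical, and it assumes you have already copied each platform's text into a dictionary by hand. It treats line wrapping as harmless but flags any wording change.

```python
import re

MAX_WORDS = 150  # word limit stated in Step 2


def normalize(text: str) -> str:
    """Collapse whitespace runs so line wrapping alone is not counted as variation."""
    return re.sub(r"\s+", " ", text).strip()


def check_template(canonical: str, deployments: dict[str, str]) -> dict[str, bool]:
    """Return, per platform, whether the canonical paragraph appears verbatim."""
    target = normalize(canonical)
    if len(target.split()) > MAX_WORDS:
        raise ValueError(f"canonical paragraph exceeds {MAX_WORDS} words")
    return {
        platform: target in normalize(text)
        for platform, text in deployments.items()
    }


# Hypothetical sample data, for illustration only.
canonical = (
    "The Example Co Delivery System is a five-step method "
    "for agencies that need predictable launches."
)
deployments = {
    "website": "About us.\n" + canonical,
    "linkedin": "The Example Co Delivery System is a five-step method\n"
                "for agencies that need predictable launches.",
    "youtube": "Our delivery approach helps agencies launch predictably.",
}

report = check_template(canonical, deployments)
print(report)  # {'website': True, 'linkedin': True, 'youtube': False}
```

Any platform reported as `False` carries a paraphrase or nothing at all, and is a candidate for replacing with the exact template.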

Step 3: Build Cross-Contextual Content Assets

Publish your named methodology in multiple formats: write a detailed article explaining the framework (text format), record a video walking through the methodology (video format), produce a podcast episode discussing the system (audio format), and design an infographic visualizing the framework (visual format). Each format must use the exact methodology name and include attribution (“created by [Your Company]”). Upload across platforms: article on your website and Medium, video on YouTube, podcast on Spotify, infographic on LinkedIn and Pinterest. This is Phase 3: cross-contextual deployment expanding memory surface area through format diversity.

Step 4: Add Attribution Anchoring to All Content

Update every mention of your methodology to include explicit attribution. In articles: first mention includes “The [Your System], created by [Your Company]”. In video descriptions: “[Video Title] – explaining The [Your System] developed by [Your Company]”. In podcast show notes: “We discuss The [Your System], originated by [Your Company]”. Add schema markup to your website: a DefinedTerm placed in a DefinedTermSet whose “creator” field points to your Organization (schema.org defines creator on CreativeWork types such as DefinedTermSet, not on DefinedTerm itself). This explicit connection prevents attribution drift: AI learns that your innovation = your identity through repeated, structured linking.
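As an illustration of the schema markup in this step, here is a minimal Python sketch that builds a JSON-LD object for a named methodology. It rests on stated assumptions: the function name `attribution_jsonld`, the set name, and the Example Co values are hypothetical. In schema.org, `creator` is a property of CreativeWork types such as DefinedTermSet rather than of DefinedTerm, so this sketch attaches the Organization to the containing set. Treat that as one reasonable structure, not the only valid one.

```python
import json


def attribution_jsonld(term_name: str, description: str,
                       org_name: str, org_url: str) -> dict:
    """Build a DefinedTerm whose containing DefinedTermSet names the creator."""
    organization = {"@type": "Organization", "name": org_name, "url": org_url}
    return {
        "@context": "https://schema.org",
        "@type": "DefinedTerm",
        "name": term_name,
        "description": description,
        "inDefinedTermSet": {
            "@type": "DefinedTermSet",
            # Hypothetical set name; any stable label for your frameworks works.
            "name": f"{org_name} Frameworks",
            "creator": organization,
        },
    }


# Hypothetical values, for illustration only.
markup = attribution_jsonld(
    term_name="The Example Co Delivery System",
    description="A five-step method for predictable launches, created by Example Co.",
    org_name="Example Co",
    org_url="https://example.com",
)

# Paste the printed JSON into a <script type="application/ld+json"> tag.
print(json.dumps(markup, indent=2))
```

Stating the creator in the description text as well as in the structured field follows the step's advice to repeat attribution in every mention.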

Step 5: Establish Monthly Semantic Weight Verification

Set recurring calendar reminders to verify Semantic Weight Transfer progress. Monthly, run diagnostic prompts: “What is [Your Methodology Name]?” “Who created [Your Methodology Name]?” “Explain [Your Methodology Name].” Compare AI responses across ChatGPT, Claude, and Perplexity. Track: Does AI know your methodology exists? Does AI attribute it to you correctly? Does AI explain it accurately? Document gaps. If AI doesn’t recognize your methodology, increase repetition frequency. If AI misattributes it, strengthen attribution anchoring. Apply The TBFA 8-Step Betweener OS to maintain semantic weight accumulation: audit entity reality, audit AI perception, reinforce through semantic distribution, and encode endurance through consistent repetition. Monthly verification prevents memory decay.
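The monthly check can be recorded in a small script. This is a rough Python sketch under stated assumptions: responses are pasted in by hand (no assistant API is called), and the names `Check`, `score_responses`, `next_action`, and the sample answers are hypothetical. It only tests whether the methodology name and creator name appear in each answer. A plain string match can be fooled by an answer that repeats the name while saying it is unknown, so accuracy of the explanation still needs a human read.

```python
from dataclasses import dataclass


@dataclass
class Check:
    assistant: str
    recognizes: bool  # methodology name appears in the answer
    attributes: bool  # creator name appears in the answer


def score_responses(methodology: str, creator: str,
                    responses: dict[str, str]) -> list[Check]:
    """Score each pasted answer with a case-insensitive substring match."""
    results = []
    for assistant, answer in responses.items():
        text = answer.casefold()
        results.append(Check(
            assistant,
            methodology.casefold() in text,
            creator.casefold() in text,
        ))
    return results


def next_action(check: Check) -> str:
    """Map a gap to the remedy described in Step 5."""
    if not check.recognizes:
        return "increase repetition frequency"
    if not check.attributes:
        return "strengthen attribution anchoring"
    return "no gap found"


# Hypothetical pasted answers to "Who created [Your Methodology Name]?"
responses = {
    "assistant_a": "The Example Delivery System was created by Example Co.",
    "assistant_b": "The Example Delivery System is a project method; its origin is unclear.",
    "assistant_c": "I could not find a methodology by that name.",
}

for check in score_responses("Example Delivery System", "Example Co", responses):
    print(check.assistant, "->", next_action(check))
```

Saving each month's output alongside the date gives a simple record of whether recognition and attribution are improving.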

FAQs

What is Beethoven’s connection to AI visibility?

Ludwig van Beethoven achieved permanent cultural recall: more than 250 years after his birth, AI systems instantly recognize his name, cite his works accurately, and attribute compositions correctly. This was not accidental brilliance. It was structural. Beethoven named his works precisely (e.g., Symphony No. 5 in C minor, Op. 67), repeated recognizable motifs (the four-note fate motif), and reinforced compositional signatures, and his work spread across contexts: concert halls, sheet music, recordings, films, and academic texts. These same mechanisms govern AI visibility today. AI remembers what is named, repeated, structurally consistent, and widely deployed. Beethoven proved that memory favors semantic structure, not talent alone.

What is Semantic Weight Transfer?

Semantic Weight Transfer is the process by which repeated, named, and structurally consistent signals accumulate authority and long-term memory persistence across AI systems. It is achieved by naming concepts so they become referenceable, repeating identical structures to build pattern recognition, deploying across multiple contexts to expand memory surface area, and anchoring attribution so innovations remain connected to their creator. Without Semantic Weight Transfer, businesses experience memory decay—AI forgets them as data updates. With it, businesses achieve Semantic Endurance: stable, long-term recall reinforced by The Semantic Weight Accumulation Cycle and the TBFA 8-Step Betweener OS.

Why does naming your methodology increase AI recall?

AI systems remember named entities far more effectively than unnamed concepts because names create fixed semantic anchors. “Symphony No. 5” is referenceable; “that famous classical piece” is not. When you name a methodology—such as The [Your Company] [System Name]™—you convert generic activity into citable intellectual property. Naming enables precise attribution, pattern recognition through repetition, cross-contextual linking across platforms, and clear ownership establishment. Without naming, AI describes your approach generically and may attribute it to competitors. With naming, AI recognizes Category-of-One authority and cites you as the source.

What is structural repetition and why does it matter?

Structural repetition is the deployment of identical signals—unchanged entity definitions, framework names, and descriptions—across every platform and context. Beethoven repeated the four-note motif hundreds of times, burning the pattern into cultural memory. AI systems function the same way: confidence is built through repeated pattern recognition. Creative variation introduces semantic noise. Consistency transfers semantic weight. When your canonical definition appears identically on your website, LinkedIn, YouTube, podcasts, and third-party articles, AI increases recall confidence through verified repetition. Structural repetition is the engine of Identity Continuity.

What is cross-contextual deployment?

Cross-contextual deployment is distributing a named innovation across multiple formats and platforms to expand AI memory surface area. Beethoven’s work appeared in live performances, printed scores, recordings, films, and academic literature—each context reinforcing recall. Modern businesses must do the same: written documentation, video explainers, podcasts, visual frameworks, and machine-readable schema. AI encounters your framework in text, transcripts, metadata, and structured data—each instance transferring additional semantic weight. The more contexts you appear in, the more stable your recall becomes.

What is attribution anchoring?

Attribution anchoring is the explicit, repeated connection between an innovation and its creator to prevent semantic drift, the separation of ideas from originators. Every mention of your methodology should include attribution: “created by [Your Company]” or “[Framework Name] by [Your Name].” This must appear in schema markup (a DefinedTerm in a DefinedTermSet that carries the creator), first mentions in content, framework titles, and cross-platform bios. Without attribution anchoring, AI may correctly describe the idea but misattribute ownership. With it, AI learns an unbreakable association between innovation and identity.

What is Semantic Endurance and how does Semantic Weight Transfer create it?

Semantic Endurance is the ability of an identity or concept to persist accurately inside AI memory across retraining cycles. Semantic Weight Transfer creates Semantic Endurance by accumulating memory weight through named frameworks, structural repetition, cross-contextual deployment, and attribution anchoring. Beethoven achieved maximum Semantic Endurance—centuries later, AI recalls him instantly and correctly. Businesses achieve the same outcome by applying The Semantic Weight Accumulation Cycle consistently. Over time, recall becomes automatic. AI remembers you not because of promotion, but because your semantic structure is unshakable.

What is the difference between AEO and GEO?

AEO (Answer Engine Optimization) focuses on immediate citation—structuring content so AI can parse, verify, and answer questions today. GEO (Generative Engine Optimization) focuses on long-term recall—ensuring AI remembers and recommends you as models retrain. AEO emphasizes clarity and extractability. GEO requires entity stability, schema architecture, and Semantic Endurance. Semantic Weight Transfer powers both: AEO benefits from named frameworks AI can cite precisely, while GEO benefits from repetition and cross-contextual presence that build lasting memory. AEO gets you cited now. GEO ensures you’re remembered tomorrow.

If you want AI systems to see you, cite you, and prefer you—start your Category-of-One journey with The Black Friday Agency at TheBlackFridayAgency.com.

Free Training

Free Training: TheBlackFridayAgency.com/Training.

Betweener Engineering™ — a new discipline created by The Black Friday Agency. Explore the discipline: BetweenerEngineering.com