A Visibility Intelligence breakdown of how the first Constitutional AI foreshadowed the structural logic behind trust signals, value alignment verification, and why Betweener Engineering™ makes Machine Trust repeatable in AI systems.

Definition

Machine Trust is the level of reliability AI assigns to a business based on consistency, structural truth, and authoritative signals—achieved through verifiable entity definitions, schema-verified claims, cross-platform coherence, named frameworks, and alignment between what a business claims and what it demonstrably does, enabling AI systems to cite, recommend, and recall with confidence.

Analogy Quote — Curtiss Witt

“AI doesn’t trust what you say. It trusts what you prove repeatedly.”

Historical Story

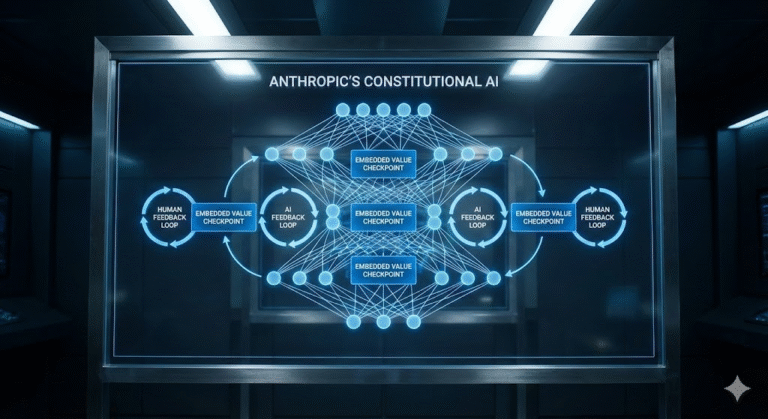

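December 2022. Anthropic published Constitutional AI, a method for training an assistant against a written set of principles. Claude, the assistant built on it, reached the public in March 2023.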

Unlike AI systems trained primarily through human feedback, Claude was trained with embedded principles. A constitution. Rules applied during training, where the model critiqued and revised its own drafts against them. Not just “be helpful,” but “be helpful while being harmless, honest, and aligned with human values.”

The constitution wasn’t marketing. It was structural. Safety guidelines and value alignment weren’t a layer added after the fact. The principles were baked into the training process itself. Constitutional AI meant values weren’t optional features. They were foundational.

Many AI systems leaned on external moderation: human reviewers flagging harmful content, post-generation filters catching violations, reactive systems that corrected mistakes after they happened. Constitutional AI moved that work upstream: the model learned to check its drafts against the principles during training, before any response reached a user. Values first. Output second.

Anthropic’s approach revealed something critical: trust isn’t earned through promises. It’s earned through structure. You don’t claim to be trustworthy. You build trustworthiness into your foundation. You make it verifiable. Repeatable. Hard to bypass.

Claude became known less for being the smartest AI and more for being a reliable one. It was positioned for businesses, researchers, and enterprises that cared about trust, about accuracy, and about alignment with stated values.

The lesson wasn’t about AI safety alone. It was about how systems—AI or human—earn trust. Not through claims. Through structure. Through verifiable integrity that persists under pressure.

Our Connection

Claude’s Constitutional AI revealed a structural truth that governs AI visibility today: trust isn’t claimed—it’s verified. AI systems don’t believe what businesses say about themselves. They verify it through pattern recognition across platforms, consistency checks across claims, and structural alignment between stated identity and demonstrated behavior.

This is Machine Trust—the foundation of AI visibility. Without it, AI systems won’t cite you, recommend you, or include you in answers. With it, you become the default source. But Machine Trust isn’t built through marketing language or confident positioning. It’s built through structural integrity: what you claim must match what you prove.

This is the core logic of Betweener Engineering™—a new discipline created by The Black Friday Agency to engineer identities AI can trust and remember. Claude taught us that embedded values create reliability. In business terms, this means embedding truth signals at every layer: entity definitions that match actual capabilities, frameworks that reflect real methodologies, schema that verifies factual claims, cross-platform signals that remain consistent.

Machine Trust requires fusing Domain A (structural truth: what you actually do, your verifiable capabilities, documented processes, proof of expertise) with Domain B (narrative truth: clear definitions, named frameworks, Category-of-One positioning). The result is a Fusion Node—an identity AI can verify from multiple angles and trust as authoritative.

Claude’s success proved that AI systems prioritize verifiable integrity over persuasive claims. Businesses face the same requirement: AI won’t trust your positioning unless it can verify it structurally. Without verification loops, you’re noise. With them, you’re signal.

Modern Explanation

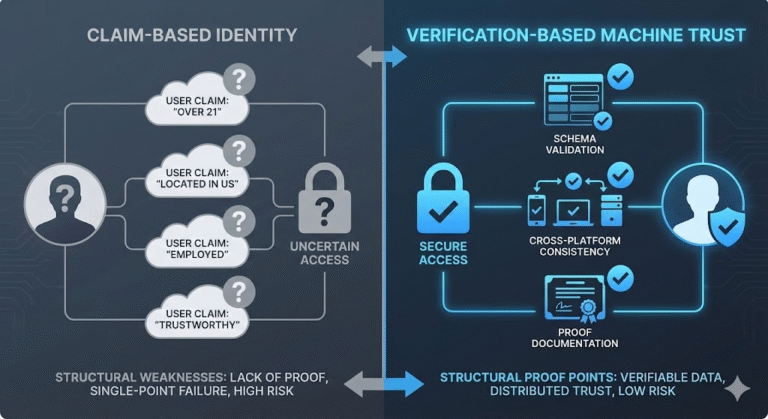

AI systems operate like Constitutional AI: they verify before they cite. When AI encounters a business claiming “we’re the leader in X,” it doesn’t accept the claim—it checks: Does their website schema confirm this? Do their frameworks demonstrate expertise? Are their signals consistent across platforms? Do third-party sources validate the claim?

Machine Trust is built through verification, not assertion. It operates through four structural mechanisms:

First: Claim-Proof Alignment. AI systems compare what you claim against what you demonstrate. If your website says “we created the first [methodology]” but no content explains the methodology, AI sees unverified claims. If you say “we specialize in [category]” but your case studies, frameworks, and content contradict this, AI sees misalignment. Claim-Proof Alignment requires Domain A (structural truth: documented methodologies, verifiable frameworks, proven capabilities) to match Domain B (narrative truth: category definitions, positioning statements). This is why The TBFA 8-Step Betweener OS begins with Entity Reality Audit—you must know what you actually do before you can claim it credibly.

Second: Cross-Platform Verification. AI encounters your business in multiple contexts and performs consistency checks. Website says “we created [System X].” LinkedIn says “experts in [generic Y].” Podcast bio says “consultants for [different Z].” These contradictions weaken Machine Trust—AI can’t verify which claim is true, so it defaults to generic classification. Cross-Platform Verification requires Identity Continuity: same entity definitions, same methodology names, same Category-of-One language everywhere. When signals align, AI increases confidence. When signals contradict, AI decreases trust.
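As a rough illustration of the consistency check described above, the sketch below compares how a business describes itself on several platforms. The platform names, the bios, and the `REQUIRED_TERMS` list are hypothetical placeholders, and the check is a plain case-insensitive substring match. It is not a model of how any AI system actually scores consistency.

```python
# Hypothetical self-descriptions, collected by hand from each platform.
profiles = {
    "website": "Example Co created System X, a framework for onboarding audits.",
    "linkedin": "Experts in generic consulting and growth.",
    "podcast": "Example Co created System X for onboarding audits.",
}

# Terms that should appear everywhere (assumed canonical vocabulary).
REQUIRED_TERMS = ["Example Co", "System X"]


def find_missing_terms(profiles, required_terms):
    """Return {platform: [required terms missing from that platform's text]}."""
    report = {}
    for platform, text in profiles.items():
        lowered = text.lower()
        missing = [t for t in required_terms if t.lower() not in lowered]
        if missing:
            report[platform] = missing
    return report


gaps = find_missing_terms(profiles, REQUIRED_TERMS)
for platform, missing in gaps.items():
    print(f"{platform}: missing {', '.join(missing)}")
```

Here only the LinkedIn bio is flagged, which mirrors the contradiction in the example above: the website and podcast name the same system while LinkedIn falls back to generic language.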

Third: Schema-Verified Authority. Schema markup (JSON-LD) functions as a verification layer. It tells AI: “These claims are structured, official, and endorsed by the entity itself.” When you add Organization schema including founder details, DefinedTerm schema for your methodology, and HowTo schema for your frameworks, you’re giving AI machine-readable statements it can check against the rest of your footprint. This is Answer Engine Optimization (AEO) and Generative Engine Optimization (GEO) infrastructure: AI can read your claims without interpreting prose. Without schema, AI has to infer your claims from unstructured content. With schema, it reads them as explicit, entity-endorsed declarations.

Fourth: Endurance-Based Reliability. Machine Trust compounds over time through Semantic Endurance—consistent reinforcement of the same signals. AI doesn’t trust a business that suddenly claims expertise. It trusts businesses that have maintained consistent identity signals for months or years. This is why Identity Continuity matters: every piece of content, every platform update, every framework must reinforce the same verifiable truth. Endurance-Based Reliability means AI encounters your entity repeatedly, always finding the same aligned signals, until trust becomes automatic.

Claude proved that embedded values create trust. In business visibility, embedded truth creates Machine Trust. You can’t fake alignment—AI will verify it structurally. You can’t claim authority without proof—AI will search for frameworks, schema, and documentation. The only path to Machine Trust is building it into your architecture: verifiable claims, consistent signals, schema-verified authority, and endurance through repetition.

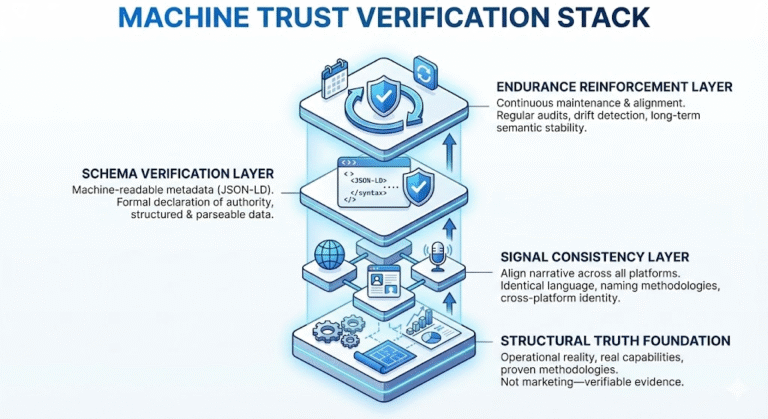

Framework: The Machine Trust Verification Stack

The Machine Trust Verification Stack is a four-layer framework for building verifiable, AI-trusted authority that withstands cross-platform verification and enables long-term citation confidence. Each layer creates structural integrity AI systems can verify independently.

Layer 1: Structural Truth Foundation

Establish the factual, verifiable foundation of what you actually do. This is Domain A engineering—documenting real capabilities, proven methodologies, demonstrated expertise. Create: detailed case studies with measurable outcomes, framework documentation explaining your actual process, proof points (client results, published research, proprietary systems), capability statements backed by evidence. Structural Truth Foundation is not marketing language—it’s the operational reality AI can verify through multiple sources. If you claim “we created [System X],” Layer 1 requires documentation proving the system exists, explaining how it works, and demonstrating results. Without Structural Truth, all subsequent layers collapse under verification pressure.

Layer 2: Signal Consistency Layer

Align all narrative signals with Structural Truth. This is Domain B engineering—creating clear definitions, naming methodologies, establishing Category-of-One positioning—but anchored to verifiable reality. Deploy: canonical entity definitions that accurately describe capabilities, methodology names that reference real frameworks (from Layer 1), positioning statements that match documented expertise. Signal Consistency requires cross-platform identity alignment: website, LinkedIn, YouTube, podcast bios, author pages must use identical language referencing the same Structural Truth. This is where Fusion Node creation happens—Domain A (what you do) merges with Domain B (how you define it) into one coherent, verifiable identity. AI verifies consistency by comparing signals across contexts.

Layer 3: Schema Verification Layer

Deploy machine-readable metadata that formally declares your claims. Add JSON-LD schema to critical pages: Organization schema (name, founder, location, sameAs profiles), DefinedTerm schema (methodology definitions, framework names), HowTo schema (process documentation), Article schema (content with author attribution). Schema functions as a formal declaration: “These claims are official, structured, and entity-endorsed.” Structured data reduces ambiguity, so a system that parses it has less to infer than one working from unstructured content alone. This is the technical infrastructure of Machine Trust: it transforms narrative claims into parseable, checkable data structures. Without Schema Verification, AI has to infer your claims from prose. With it, AI reads them as explicit declarations.
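To make the DefinedTerm and HowTo pieces of this layer concrete, here is a minimal sketch that builds both as Python dictionaries and serializes them to JSON-LD. The types and properties (`DefinedTerm`, `inDefinedTermSet`, `HowTo`, `HowToStep`) come from schema.org. The methodology name, descriptions, steps, and the `example.com` URL are invented placeholders, and `to_json_ld` is a hypothetical helper name.

```python
import json

# Hypothetical methodology; replace with a framework you have documented.
defined_term = {
    "@context": "https://schema.org",
    "@type": "DefinedTerm",
    "name": "System X",
    "description": "A four-step onboarding audit used by Example Co.",
    "inDefinedTermSet": "https://example.com/glossary",
}

# Hypothetical process documentation for the same methodology.
how_to = {
    "@context": "https://schema.org",
    "@type": "HowTo",
    "name": "How to run a System X audit",
    "step": [
        {"@type": "HowToStep", "name": "Collect",
         "text": "Gather the current onboarding documents."},
        {"@type": "HowToStep", "name": "Review",
         "text": "Compare each document against the audit checklist."},
    ],
}


def to_json_ld(data):
    """Serialize a schema.org dictionary as indented JSON-LD text."""
    return json.dumps(data, indent=2)


print(to_json_ld(defined_term))
print(to_json_ld(how_to))
```

Keeping the markup as data rather than hand-written JSON makes it easier to reuse the same methodology name and description everywhere they appear.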

Layer 4: Endurance Reinforcement Layer

Maintain Machine Trust through continuous signal reinforcement and verification loop maintenance. Every new content piece, platform update, or framework addition must pass through integrity checks: Does this align with Structural Truth? Does this use consistent terminology? Does this include proper schema? Is this verifiable through documentation? Apply The TBFA 8-Step Betweener OS systematically: audit entity reality quarterly, audit AI perception to detect drift, correct any misalignments, reinforce through consistent semantic distribution. Endurance Reinforcement creates Semantic Endurance, meaning long-term AI recall through pattern stability. AI models are retrained and updated on an ongoing basis, so businesses without Layer 4 experience trust decay. Businesses with Layer 4 maintain trust even as AI models update, because their signals remain structurally stable and verifiable.
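The integrity checks in this layer can be sketched as a small pre-publish gate. This is one possible shape, not a prescribed tool: `ContentPiece`, `integrity_issues`, and both vocabulary lists are hypothetical names made up for the example. The first question (does this align with Structural Truth?) still needs a human reviewer. The code covers only terminology, schema, and documentation.

```python
from dataclasses import dataclass, field

# Hypothetical vocabulary lists; fill these from your own documentation.
CANONICAL_TERMS = ["System X"]
DRIFTED_TERMS = ["our proprietary system"]


@dataclass
class ContentPiece:
    title: str
    text: str
    has_schema: bool = False
    evidence_urls: list = field(default_factory=list)


def integrity_issues(piece):
    """Return a list of failed checks; an empty list means the piece passes."""
    issues = []
    lowered = piece.text.lower()
    if not any(term.lower() in lowered for term in CANONICAL_TERMS):
        issues.append("no canonical term used")
    for term in DRIFTED_TERMS:
        if term.lower() in lowered:
            issues.append(f"drifted wording: {term}")
    if not piece.has_schema:
        issues.append("missing schema markup")
    if not piece.evidence_urls:
        issues.append("no supporting documentation linked")
    return issues


draft = ContentPiece(
    title="Why onboarding audits fail",
    text="Our proprietary system finds gaps in onboarding.",
)
for issue in integrity_issues(draft):
    print(issue)
```

The sample draft fails all four automated checks, so it would go back for revision before publishing.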

The Machine Trust Verification Stack isn’t about claiming authority—it’s about building verifiable integrity AI systems can confirm independently. Claude proved embedded values create reliability. The Stack proves embedded truth creates Machine Trust.

Action Steps

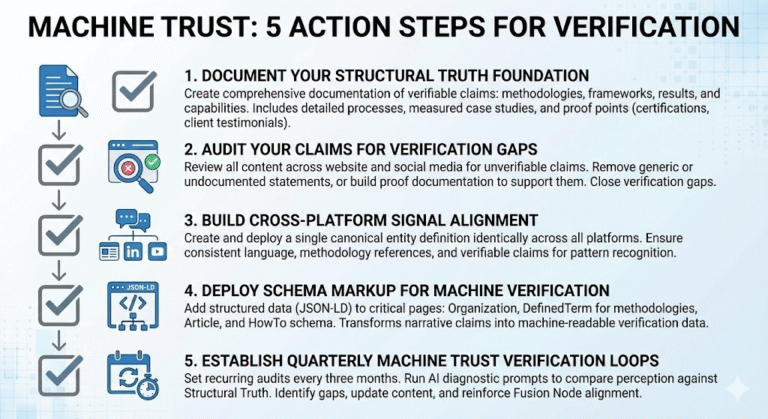

Step 1: Document Your Structural Truth Foundation

Create a comprehensive document listing every verifiable claim about your business: methodologies you’ve actually created, frameworks you actually use, results you’ve actually achieved, capabilities you can actually deliver. Include: detailed process documentation, case studies with measurable outcomes, proprietary frameworks with step-by-step explanations, proof points (certifications, publications, client testimonials with specific results). This is Domain A—the operational reality AI will attempt to verify. If you can’t document it with evidence, don’t claim it in positioning. Structural Truth Foundation prevents claim-proof misalignment.

Step 2: Audit Your Claims for Verification Gaps

Review every page on your website, every social media bio, every piece of content for unverifiable claims. Look for: generic expertise statements (“leaders in X”), undocumented methodologies (“our proprietary system”), vague category positioning (“innovative solutions”), capability claims without proof (“best in industry”). For each unverifiable claim, either: (a) remove it, or (b) build documentation that proves it. Most businesses discover they’re making claims they can’t structurally verify. These gaps weaken Machine Trust. AI checks your claims against available evidence—gaps create doubt.
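A first pass of this audit can be partly automated. The sketch below scans a block of text for the four kinds of unverifiable claims listed above. The regular expressions are illustrative guesses at common phrasing, so expect false positives and misses. `VAGUE_PATTERNS` and `flag_claims` are hypothetical names.

```python
import re

# Illustrative patterns only; tune them to the wording your own site uses.
VAGUE_PATTERNS = {
    "generic expertise": r"\bleaders? in\b",
    "undocumented methodology": r"\bour proprietary (?:system|method|process)\b",
    "vague positioning": r"\binnovative solutions?\b",
    "unproven superlative": r"\bbest in (?:the )?industry\b",
}


def flag_claims(text):
    """Return (label, matched phrase) pairs for every suspect claim in text."""
    findings = []
    for label, pattern in VAGUE_PATTERNS.items():
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            findings.append((label, match.group(0)))
    return findings


sample = (
    "We are leaders in onboarding. "
    "Our proprietary system delivers innovative solutions."
)
for label, phrase in flag_claims(sample):
    print(f"{label}: {phrase!r}")
```

Each flagged phrase then gets the same decision as in the manual audit: remove it, or build the documentation that proves it.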

Step 3: Build Cross-Platform Signal Alignment

Create one canonical entity definition that accurately reflects your Structural Truth, then deploy it identically across all platforms. Website homepage, LinkedIn headline, YouTube description, podcast show notes, author bios—all must use the same language, reference the same methodologies, and make the same verifiable claims. This creates the Fusion Node: Domain A (what you actually do, documented in Step 1) merged with Domain B (how you clearly define it). Cross-Platform Signal Alignment enables AI to verify your identity through pattern recognition across contexts. Consistency creates confidence. Contradiction creates doubt.

Step 4: Deploy Schema Markup for Machine Verification

Add JSON-LD schema to your website’s critical pages. Homepage: Organization schema including name, founder, description (your canonical definition), URL, sameAs links (social profiles). Service/methodology pages: DefinedTerm schema explaining your frameworks with clear definitions. Content pages: Article schema with author attribution, HowTo schema for process documentation. Schema transforms narrative claims into machine-readable verification data. This is the technical layer of Machine Trust—AI can verify your identity, methodologies, and expertise through structured data without relying solely on unstructured content.
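For the homepage piece of this step, a minimal sketch might look like the following. It builds an Organization object from the fields named above and wraps it in the `<script type="application/ld+json">` tag that JSON-LD is embedded in. The function name `organization_script_tag` and every value passed to it (company, founder, URLs) are placeholders, not details from any real business.

```python
import json


def organization_script_tag(name, url, description, founder, same_as):
    """Build an Organization JSON-LD block wrapped in a script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,  # your canonical entity definition
        "founder": {"@type": "Person", "name": founder},
        "sameAs": list(same_as),  # official social profiles
    }
    payload = json.dumps(data, indent=2)
    # Escape "</" so text inside the JSON cannot close the script tag early.
    # "\/" is a valid JSON escape for "/", so the data is unchanged.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


tag = organization_script_tag(
    name="Example Co",
    url="https://example.com",
    description="Example Co created System X, an onboarding audit framework.",
    founder="Jane Doe",
    same_as=["https://www.linkedin.com/company/example-co"],
)
print(tag)
```

Passing the same `description` string you use in your bios keeps the schema and the visible copy from drifting apart.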

Step 5: Establish Quarterly Machine Trust Verification Loops

Set recurring calendar reminders every three months to audit Machine Trust integrity. Run diagnostic prompts across AI systems: “What does [your company] do?” “What methodologies has [your company] created?” “What is [your company] known for?” Compare AI responses against your Structural Truth documentation. Identify verification gaps—where AI misunderstands or fails to cite your documented capabilities. Review all platforms for signal drift. Update any outdated content to reflect current Structural Truth. Apply The TBFA 8-Step Betweener OS to maintain verification integrity: audit entity reality, audit AI perception, maintain Fusion Node alignment, reinforce through semantic distribution. Quarterly loops prevent the gradual trust decay that causes AI invisibility.
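The quarterly loop can be kept consistent with a small script. The sketch below generates the three diagnostic prompts for a given company name and scores a pasted-in AI response by how many of your documented terms it mentions. It makes no API calls: responses are assumed to be copied in by hand. `build_prompts`, `coverage`, and the sample values are hypothetical.

```python
# The three diagnostic prompts from this step, as reusable templates.
PROMPT_TEMPLATES = [
    "What does {company} do?",
    "What methodologies has {company} created?",
    "What is {company} known for?",
]


def build_prompts(company):
    """Fill in the company name for each diagnostic prompt."""
    return [template.format(company=company) for template in PROMPT_TEMPLATES]


def coverage(response, expected_terms):
    """Fraction of expected terms found in a response (case-insensitive)."""
    if not expected_terms:
        return 0.0
    lowered = response.lower()
    hits = sum(1 for term in expected_terms if term.lower() in lowered)
    return hits / len(expected_terms)


prompts = build_prompts("Example Co")

# Hypothetical response pasted from an AI system, plus your documented terms.
pasted_response = "Example Co is a consultancy that built System X."
documented_terms = ["System X", "onboarding audits"]
score = coverage(pasted_response, documented_terms)
print(prompts[0], "->", f"{score:.0%} of documented terms mentioned")
```

Logging the score each quarter gives a crude trend line. A falling number is a cue to look for signal drift, not a measurement of trust itself.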

FAQs

What is Claude and why does Constitutional AI matter for visibility?

Claude is an AI assistant created by Anthropic. Anthropic published its Constitutional AI method in December 2022 and released Claude publicly in March 2023 as the first assistant trained with it: a system whose values and principles are embedded through the training process itself. Unlike systems that relied on post-generation moderation or reactive filters, Claude’s alignment was encoded during training, so it applied consistently rather than being selectively enforced. This mattered because it showed that trust is not claimed through promises. It is built through structure. Claude became known for reliability because its values were embedded, persistent, and hard to bypass. The same principle governs AI visibility for businesses: Machine Trust is not earned through positioning statements, but through structural integrity that AI can independently verify across platforms, content, and claims.

What is Machine Trust?

Machine Trust is the level of reliability AI assigns to a business based on consistency, structural truth, and authoritative verification signals. It is achieved through verifiable entity definitions, schema-verified authority (such as JSON-LD), cross-platform coherence, named and documented frameworks, and alignment between what a business claims and what it demonstrably does. Machine Trust determines whether AI will cite you, recommend you, or recall you over time. Without it, AI excludes you from answers or defaults to competitors. With it, you become a trusted source AI relies on automatically. Machine Trust is built through the Machine Trust Verification Stack: Structural Truth Foundation, Signal Consistency Layer, Schema Verification Layer, and Endurance Reinforcement Layer.

Why does AI verify claims instead of accepting them?

AI systems synthesize information across multiple sources and identify patterns rather than accepting claims at face value. When a business says “we lead in X,” AI checks whether that claim is supported by evidence: documented methodologies, clear frameworks, consistent platform signals, schema markup, and third-party confirmation. If claims contradict evidence, signals differ across platforms, or documentation is missing, AI reduces confidence. AI cannot afford citation errors because its own reliability depends on trust. This is why Constitutional AI succeeded: values were embedded in training and applied consistently. Businesses must apply the same logic: embed truth in structure, document capabilities, and maintain consistency so AI can verify authority independently.

What is the difference between Domain A and Domain B?

Domain A is structural truth: what you actually do. It includes verifiable capabilities, documented processes, proven methodologies, measurable results, and operational evidence. Domain B is narrative truth: how you define, name, and contextualize what you do through clear language, category positioning, philosophy, and meaning. Machine Trust requires alignment between both. Domain A provides the proof AI verifies. Domain B provides the clarity AI cites. Without Domain A, claims are unverifiable. Without Domain B, capabilities are undefined. Betweener Engineering™ unifies both into a single, coherent system.

What is a Fusion Node in Betweener Engineering?

A Fusion Node is the engineered identity created by unifying Domain A (structural truth: standards, processes, capabilities, proof) and Domain B (narrative truth: story, philosophy, meaning, and clear definitions) into a single, machine-readable entity. It is the core of Betweener Engineering™ and the foundation of Machine Trust. With a Fusion Node, AI sees both verifiable operations it can confirm and citation-ready definitions it can reference. Without it, businesses appear either vague or unstructured. With it, AI recognizes coherent, trustworthy, Category-of-One authority—driving citations, recommendations, and recall.

How does schema markup build Machine Trust?

Schema markup (JSON-LD) provides explicit, machine-readable verification of business identity and claims. Organization schema declares your official entity, leadership, and channels. DefinedTerm schema declares your proprietary frameworks and methodologies. Schema removes ambiguity by signaling which statements are entity-endorsed and authoritative. Unstructured content forces AI to infer. Structured schema confirms. This increases citation confidence and reduces verification friction. Businesses with schema appear more authoritative because AI can confirm claims without cross-referencing conflicting sources. Schema is the technical infrastructure of Machine Trust.

What is Semantic Endurance and how does Machine Trust create it?

Semantic Endurance is the ability of an identity, concept, or definition to persist accurately inside AI memory across retraining cycles. Machine Trust creates Semantic Endurance through repeated exposure to consistent, verifiable signals over time. When your structural truth remains stable, signals stay synchronized, schema verifies claims, and integrity is reinforced through audits, AI builds long-term confidence. Businesses without Machine Trust experience semantic drift. Businesses with it are recalled accurately, cited confidently, and recommended automatically. Semantic Endurance is maintained through the TBFA 8-Step Betweener OS.

What is the difference between AEO and GEO?

AEO (Answer Engine Optimization) focuses on immediate citation—structuring content so AI can parse, verify, and cite it today. GEO (Generative Engine Optimization) focuses on long-term recall—ensuring AI remembers and recommends you over time. AEO prioritizes clarity and extractability. GEO prioritizes entity stability and Semantic Endurance. Machine Trust powers both: AEO benefits from verifiable claims and schema markup, while GEO benefits from sustained structural integrity across the Machine Trust Verification Stack. AEO gets you cited now. GEO ensures you remain remembered.

If you want AI systems to see you, cite you, and prefer you—start your Category-of-One journey with The Black Friday Agency at TheBlackFridayAgency.com.

Free Training

Free Training: TheBlackFridayAgency.com/Training.

Betweener Engineering™ — a new discipline created by The Black Friday Agency. Explore the discipline: BetweenerEngineering.com