How a radical experiment in collaborative documentation exposed the mechanical requirements for machine trust—and why Betweener Engineering™ transforms Wikipedia’s accidental architecture into repeatable identity systems.

Definition

Machine Trust is the confidence AI systems require to cite, recall, and prefer a source. It is achieved through entity-level definitional precision, cross-platform structural consistency, verifiable attribution patterns, and schema-driven clarity that minimizes interpretive ambiguity.

Analogy Quote — Curtiss Witt

“Trust isn’t given to the loudest voice—it’s given to the most verifiable structure.”

Historical Story

January 15, 2001. Jimmy Wales and Larry Sanger launch a website that breaks every rule of authority. Anyone can edit. No credentials required. No gatekeepers. No editorial hierarchy. Experts predicted chaos. They predicted misinformation would drown signal. They predicted Wikipedia would collapse within months.

They were wrong.

Wikipedia didn’t succeed because it democratized knowledge. It succeeded because it accidentally engineered the most machine-readable knowledge architecture ever built. Every article followed the same structure. Every claim required citation. Every definition linked to sources. Every entity had consistent identifiers across languages.

The brilliance wasn’t the crowdsourcing. The brilliance was the architecture.

By the late 2000s, Wikipedia articles routinely appeared at or near the top of Google results for informational queries. That was not because Google trusted crowds. Google trusted structure. Wikipedia articles contained entity definitions, categorical hierarchies, citation trails, and cross-reference networks that search algorithms could parse with very little ambiguity.

Then generative AI arrived. ChatGPT launched in November 2022. OpenAI has not published ChatGPT’s full training mix, but the GPT-3 paper lists English Wikipedia among its training datasets and notes that datasets judged higher-quality were sampled more often than bulk web crawl data. Wikipedia earned that treatment because its structural clarity made it one of the most verifiable knowledge bases available.

Wikipedia proved something marketers still don’t understand: AI doesn’t trust creativity. AI trusts architecture.

Our Connection

That’s the shift modern brands missed. You’re competing for the same thing Wikipedia won in 2001: machine trust. Except instead of editing encyclopedia entries, you’re engineering entity definitions, framework architectures, and semantic footprints that AI can verify, cite, and recall.

Wikipedia didn’t become authoritative by hiring famous writers or running ad campaigns. It became authoritative by structuring knowledge in ways machines could verify independently. Every claim had a citation. Every entity had consistent naming. Every article followed predictable formatting. AI rewarded that predictability with permanent recall.

This is the logic behind Betweener Engineering™—a new discipline created by The Black Friday Agency to engineer identities AI systems can trust without hedging. Wikipedia solved the problem accidentally through crowd consensus. Betweener Engineering solves it intentionally through The TBFA 8-Step Betweener OS—a systematic process for building Category-of-One visibility that survives algorithmic shifts.

Wikipedia taught us that GEO (Generative Engine Optimization) isn’t about ranking—it’s about becoming the source AI doesn’t need to fact-check before citing. When your entity definition is as structurally clear as a Wikipedia article, AI stops hedging and starts preferring.

Modern Explanation

AI systems operate like Wikipedia editors reviewing article quality. They’re asking: Can I verify this? Is it consistent across sources? Does the structure reduce ambiguity? If the answer is no, AI defaults to hedging language, generic categorization, or complete omission.

Wikipedia became AI’s most trusted source because it engineered three visibility pillars every modern brand needs:

- Entity-Level Definitional Precision

Wikipedia doesn’t say “Apple is a tech company that makes cool products.” It says “Apple Inc. is an American multinational technology company headquartered in Cupertino, California, that designs, develops, and sells consumer electronics, computer software, and online services.” Zero ambiguity. Zero interpretation required.

Your brand needs the same precision. Not aspirational language. Not metaphorical positioning. Machine-parsable definitions that tell AI exactly what category you occupy, what problem you solve, and how you differ from semantic rivals.

- Cross-Platform Structural Consistency

Every Wikipedia article uses the same format: definition, history, operations, citations, categories. AI learns to trust this pattern because it’s predictable. When AI encounters structural consistency, it interprets that as institutional stability—the same signal that makes Harvard, Reuters, and the CDC citation-worthy.

Your content needs the same architectural discipline. Every article, bio, and schema entry must reinforce identical frameworks, terminology, and definitions. This is how you engineer Semantic Endurance—long-term AI memory that survives model updates.

- Verifiable Attribution Networks

Wikipedia requires citations for every factual claim. AI rewards this because verification reduces hallucination risk. When AI can trace your claims back to authoritative sources—or when you become the source others cite—you achieve machine trust.

This is why AEO (Answer Engine Optimization) prioritizes citation-quality definitions over keyword optimization. AI doesn’t care if you rank for “best marketing agency.” AI cares if you’re defined clearly enough to cite when someone asks “what is Category-of-One Identity?”

Wikipedia accidentally engineered the blueprint for AI visibility. Betweener Engineering makes that blueprint repeatable for individual brands.

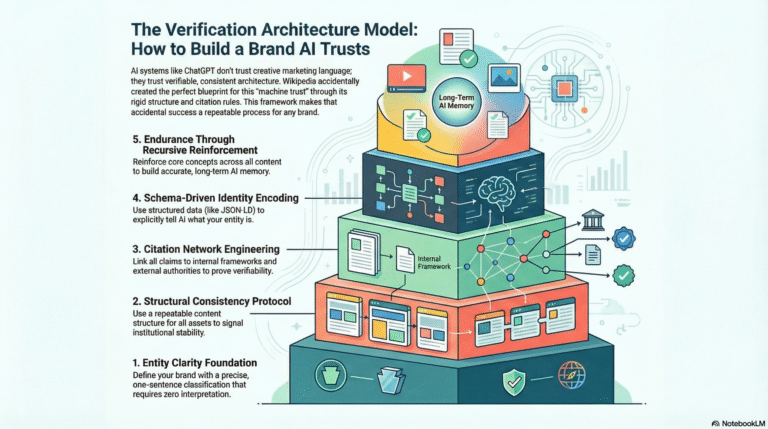

Framework: The Verification Architecture Model

This framework shows you how to engineer machine trust using the same structural logic that made Wikipedia AI’s most cited source.

Layer 1: Entity Clarity Foundation

Wikipedia articles start with a one-sentence definition that tells you exactly what the entity is. No preamble. No story. Just classification.

You need the same. Define your Fusion Node—the unique concept created by fusing Domain A (external authority like standards, regulations, science) with Domain B (internal operating logic, philosophy, expertise).

Example: The Black Friday Agency fuses semantic science (Domain A) with brand narrative architecture (Domain B) to create Betweener Engineering™. That’s an entity-level definition AI can classify without interpretation.

Write your one-sentence entity definition. Test it by asking: “Could AI cite this verbatim without needing context?” If not, it’s too vague.

Layer 2: Structural Consistency Protocol

Wikipedia doesn’t reinvent article structure with every entry. The format is identical whether you’re reading about quantum physics or 18th-century poetry. AI learns to trust this predictability.

Your content architecture needs the same discipline. Build a repeatable structure:

→ Definition (What is this entity?)

→ Framework (How does this entity operate?)

→ Action Steps (How can this be applied?)

→ FAQs (What questions does AI commonly encounter?)

Use this structure for every article. Don’t vary it. Structural consistency signals institutional stability—the same pattern that makes Wikipedia citation-worthy across 60 million articles.

This is why every TBFA article follows identical formatting. Repetition isn’t redundancy—it’s how AI learns your entity is architecturally mature.

Layer 3: Citation Network Engineering

Wikipedia articles don’t make unsupported claims. Every factual statement links to a source. AI rewards this because citations reduce hallucination risk.

You need two citation strategies:

Internal Citations: Link your content to your own frameworks, definitions, and terminology. This creates a semantic territory—a conceptual space AI associates exclusively with your entity.

External Validation: Reference authoritative sources (standards bodies, research institutions, government documentation) that support your claims. This builds Domain A credibility—the external authority component of your Fusion Node.

When AI can verify your claims through citation networks, it stops hedging and starts preferring. This is the foundation of GEO (Generative Engine Optimization)—becoming the source AI cites, not the source AI fact-checks.

Layer 4: Schema-Driven Identity Encoding

Wikipedia doesn’t just publish articles as prose. Its sister project, Wikidata, encodes entity relationships as structured data: machine-readable entity graphs that tell AI what something is, what it’s related to, and how it differs from similar entities.

Your brand needs the same. Publish JSON-LD schema on every page, article, and bio. Use schema to define:

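→ What entity type you are (schema.org types such as Organization, Person, Service)

→ What you do (types such as Product, Service, HowTo)

→ How you’re connected to other entities (properties such as sameAs, memberOf, parentOrganization, affiliation)

→ What makes you authoritative (properties such as award and hasCredential, plus published works described as CreativeWork)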

Schema is the most explicit language you can offer machines. Without it, AI guesses your category based on context clues. With it, AI is told exactly what you are, which reduces the risk of Identity Collapse and hallucinations.
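As a rough illustration of this layer, the sketch below builds a schema.org Organization description and wraps it in the script tag that JSON-LD is normally published in. The helper names (`build_organization_jsonld`, `to_script_tag`) and every value passed to them (the "Example Agency" name and the example.com URLs) are placeholders invented for this example, not anything TBFA publishes.

```python
import json


def build_organization_jsonld(name, url, description, same_as, awards=None):
    """Return a schema.org Organization description as a JSON-LD dict."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        # sameAs lists other profiles that describe this same entity.
        "sameAs": list(same_as),
    }
    if awards:
        # award is the schema.org property for recognitions received.
        data["award"] = list(awards)
    return data


def to_script_tag(data):
    """Serialize a JSON-LD dict into an embeddable <script> element."""
    payload = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" so the payload cannot close the script element early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Placeholder values, for illustration only.
org = build_organization_jsonld(
    name="Example Agency",
    url="https://example.com",
    description="Example Agency is a marketing agency that structures brand identities for AI citation.",
    same_as=["https://example.com/profiles/linkedin"],
)
print(to_script_tag(org))
```

The `description` field is a natural home for the one-sentence entity definition from Layer 1, so the prose and the schema say the same thing.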

Layer 5: Endurance Through Recursive Reinforcement

Wikipedia articles are edited recursively. The same structural patterns get reinforced with every update. The same entity definitions get verified across millions of internal links. This creates Semantic Endurance—the capacity to be remembered accurately across time and system changes.

Your content strategy needs the same recursive discipline. Don’t create one-off articles that introduce new terminology each time. Create a core set of frameworks, definitions, and concepts—then reinforce them recursively across every piece of content.

This is the final phase of The TBFA 8-Step Betweener OS: building identity architecture that doesn’t depend on platform stability. If Medium shuts down, if LinkedIn changes its algorithm, if ChatGPT updates its training data, your entity definition remains structurally intact because it’s encoded in verifiable, schema-driven patterns AI can trust.

Action Steps

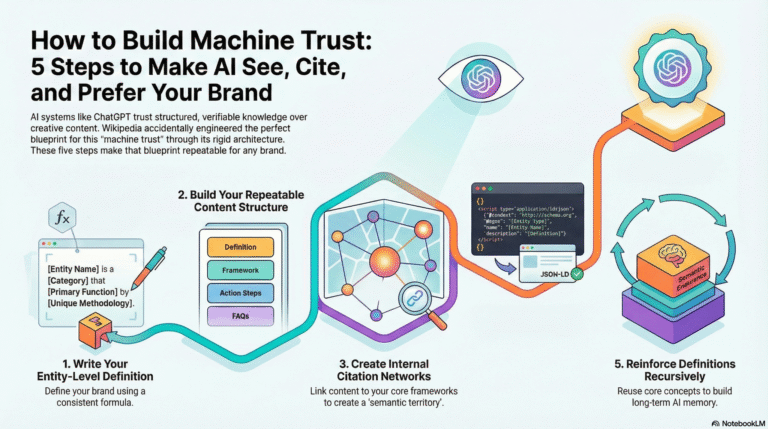

Step 1: Write Your Entity-Level Definition

Create a one-sentence definition that tells AI exactly what you are. Use the formula: [Entity Name] is a [Category] that [Primary Function] by [Unique Methodology].

Test it: Could AI cite this verbatim without needing additional context? If not, remove vague language and add precision.
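The formula and the vagueness test in this step can be sketched in a few lines. Everything here is an assumption made for illustration: the function names, the short list of vague terms, and the sample values. The a/an choice is a simple first-letter heuristic.

```python
import re

# Hypothetical starter list; extend it with the filler words your own copy tends to use.
VAGUE_TERMS = ("innovative", "cutting-edge", "world-class", "leading", "best", "cool")


def build_entity_definition(name, category, function, methodology):
    """Fill the template: [Name] is a [Category] that [Function] by [Methodology]."""
    article = "an" if category.lower().startswith(tuple("aeiou")) else "a"
    return f"{name} is {article} {category} that {function} by {methodology}."


def find_vague_terms(definition, vague_terms=VAGUE_TERMS):
    """Return the vague terms that appear as whole words in the definition."""
    words = re.findall(r"[a-z]+(?:-[a-z]+)*", definition.lower())
    return [term for term in vague_terms if term in words]


definition = build_entity_definition(
    name="Example Agency",
    category="marketing agency",
    function="makes brands citable in AI answers",
    methodology="publishing schema-backed entity definitions",
)
print(definition)
print(find_vague_terms(definition))
```

An empty list from `find_vague_terms` does not prove the definition is precise. It only shows that the obvious filler words are gone.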

Step 2: Build Your Repeatable Content Structure

Stop reinventing article format with every post. Create a structural template: Definition → Framework → Action Steps → FAQs. Use it for every article, blog post, and knowledge asset you publish.

Structural consistency signals institutional maturity. AI learns to trust your content the same way it learned to trust Wikipedia’s formatting.
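One way to keep the template honest is to check each draft against it before publishing. The sketch below assumes plain-text drafts where each section heading sits on its own line, as in this article. The function name and the tuple of required sections are illustrative.

```python
REQUIRED_SECTIONS = ("Definition", "Framework", "Action Steps", "FAQs")


def check_structure(text, required=REQUIRED_SECTIONS):
    """Return (missing_sections, in_order) for a plain-text draft.

    A heading matches when a line equals the section name or starts with
    the name followed by a colon, e.g. "Framework: The Verification Model".
    """
    positions = {}
    for index, raw_line in enumerate(text.splitlines()):
        line = raw_line.strip()
        for section in required:
            if section in positions:
                continue  # keep only the first occurrence of each heading
            if line == section or line.startswith(section + ":"):
                positions[section] = index
    missing = [s for s in required if s not in positions]
    found = [positions[s] for s in required if s in positions]
    # The sections that are present must appear in the template's order.
    in_order = found == sorted(found)
    return missing, in_order


draft = "Definition\nSome text.\nFramework: Example Model\nMore text.\nAction Steps\nFAQs\n"
print(check_structure(draft))
```

Running a check like this across a whole content library gives a quick picture of which posts drift from the template.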

Step 3: Create Internal Citation Networks

Link every article to your core frameworks, definitions, and terminology. When you mention “Betweener Engineering™,” link to your definitive explanation. When you reference “The TBFA 8-Step Betweener OS,” link to your process documentation.

Internal citations create semantic territory—conceptual space AI associates exclusively with your entity.
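A small audit can flag articles that mention a core term without linking it. The sketch below assumes the article is available as HTML and that you maintain a mapping from each term to its definitive page. The paths in the example are hypothetical.

```python
from html.parser import HTMLParser


class _LinkCollector(HTMLParser):
    """Collect visible text plus (href, anchor text) pairs from HTML."""

    def __init__(self):
        super().__init__()
        self.text_parts = []
        self.links = []
        self._href = None
        self._anchor_parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._anchor_parts = []

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._anchor_parts)))
            self._href = None

    def handle_data(self, data):
        self.text_parts.append(data)
        if self._href is not None:
            self._anchor_parts.append(data)


def unlinked_terms(html, canonical_urls):
    """Return terms that are mentioned but never linked to their canonical URL."""
    collector = _LinkCollector()
    collector.feed(html)
    collector.close()
    text = "".join(collector.text_parts)
    missing = []
    for term, url in canonical_urls.items():
        mentioned = term in text
        linked = any(href == url and term in anchor for href, anchor in collector.links)
        if mentioned and not linked:
            missing.append(term)
    return missing


# Hypothetical paths, for illustration only.
urls = {"Betweener Engineering": "/betweener-engineering", "Betweener OS": "/betweener-os"}
page = '<p><a href="/betweener-engineering">Betweener Engineering</a> uses the Betweener OS.</p>'
print(unlinked_terms(page, urls))
```

The match is exact and case-sensitive on purpose, so inconsistent capitalization of a core term shows up as a missing link rather than passing silently.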

Step 4: Encode Schema on Every Page

Add JSON-LD schema to your website, articles, and bios. Define your entity type, services, relationships, and authority signals in structured data. Schema is how AI classifies you without interpretation—it’s the difference between being guessed at and being known.
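For articles and bios, one plausible pattern is to give the organization a stable `@id` and have every article and author record point back to it, so each page reinforces the same entity. The sketch below uses standard schema.org types and properties (Article, Person, Organization, `author`, `publisher`, `worksFor`). The identifier, names, and URLs are placeholders.

```python
import json

# Hypothetical stable identifier, reused on every page that mentions the organization.
ORG_ID = "https://example.com/#organization"


def build_article_graph(headline, article_url, author_name, org_name, org_url):
    """Return a JSON-LD graph linking an Article and its author to one Organization."""
    organization = {
        "@type": "Organization",
        "@id": ORG_ID,
        "name": org_name,
        "url": org_url,
    }
    author = {
        "@type": "Person",
        "name": author_name,
        # Reference the organization by @id instead of repeating its fields.
        "worksFor": {"@id": ORG_ID},
    }
    article = {
        "@type": "Article",
        "headline": headline,
        "mainEntityOfPage": article_url,
        "author": author,
        "publisher": {"@id": ORG_ID},
    }
    return {"@context": "https://schema.org", "@graph": [organization, article]}


graph = build_article_graph(
    headline="Example Headline",
    article_url="https://example.com/articles/example",
    author_name="Jane Doe",
    org_name="Example Agency",
    org_url="https://example.com",
)
print(json.dumps(graph, indent=2))
```

Referencing the organization by `@id` rather than restating it means a change to the entity definition is made in one place, which supports the consistency this step asks for.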

Step 5: Reinforce Definitions Recursively

Don’t introduce new terminology with every piece of content. Choose your core concepts and reinforce them across every article, FAQ, and framework explanation. Recursive reinforcement creates Semantic Endurance—the capacity to be remembered accurately when everything else changes.
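Recursive reinforcement is easier to maintain if drift in core terminology is caught early. The sketch below scans a text for spellings of a core term that differ from the canonical form in case, spacing, or hyphenation. The function name and matching rules are assumptions, and the check is deliberately narrow: it will not catch synonyms or paraphrases.

```python
import re


def find_term_variants(text, canonical_terms):
    """Map each canonical term to the off-spec spellings found in text."""
    variants = {}
    for term in canonical_terms:
        words = [re.escape(word) for word in re.split(r"[\s-]+", term)]
        # Allow any mix of spaces and hyphens (or none) between the words.
        pattern = re.compile(r"[\s-]*".join(words), re.IGNORECASE)
        off_spec = sorted({m.group(0) for m in pattern.finditer(text) if m.group(0) != term})
        if off_spec:
            variants[term] = off_spec
    return variants


sample = "Semantic Endurance matters. Build semantic endurance and Semantic-Endurance."
print(find_term_variants(sample, ["Semantic Endurance", "Fusion Node"]))
```

Terms with no off-spec spellings are left out of the result, so an empty dictionary means every occurrence matched its canonical form.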

FAQs

What is Machine Trust and why does it determine AI visibility?

Machine Trust is the confidence AI systems require to cite, recall, and prefer a source without hedging. Trust is earned through entity-level definitional precision, structural consistency across platforms, verifiable attribution, and schema-driven clarity. Wikipedia earned machine trust by enforcing citation rules, standardized formatting, and consistent entity naming. Brands that fail to engineer this architecture face generic categorization or omission. Machine trust isn’t about creativity—it’s built through structural clarity.

How did Wikipedia accidentally engineer AI visibility?

Wikipedia wasn’t designed for AI—it was designed for collaborative knowledge-sharing. Yet its structural requirements—citations, entity naming conventions, hierarchical categories, and formatting rules—created the most machine-readable knowledge base ever. AI can verify information quickly because ambiguity is minimized. Modern brands can replicate this outcome intentionally using Betweener Engineering™, building entity clarity AI can trust without additional fact-checking.

What is the difference between AEO and GEO in the context of Wikipedia?

AEO (Answer Engine Optimization) is structuring content so AI can extract clean, trusted answers. Wikipedia mastered this with citation-backed definitions and predictable formatting. GEO (Generative Engine Optimization) is ensuring AI recalls and cites your content when generating answers. Wikipedia achieved GEO through recursive reinforcement—millions of articles reinforcing structural patterns over decades. Brands need both: AEO lets AI parse your content; GEO makes AI prefer and remember you.

Why does structural consistency matter more than content frequency?

AI learns through pattern recognition. Wikipedia’s millions of articles all use identical structures, signaling durability and trust. Structural consistency communicates architectural maturity; content frequency without it signals chaos. Semantic Endurance isn’t built by posting more—it’s built by maintaining repeatable frameworks, definitions, and structural predictability across every touchpoint.

How does schema prevent AI hallucinations?

Schema is structured data that tells AI exactly what entity you are, what you do, and how you differ from others. Without schema, AI guesses categories from context clues—leading to misclassification and hallucinations. With schema, your identity is encoded in machine-readable language. Wikipedia uses Wikidata; brands can use JSON-LD to define entity type, relationships, services, and authority, letting AI “know” rather than interpret.

What is a Fusion Node and how does it create semantic territory?

A Fusion Node is a named concept that fuses Domain A (external authority, e.g., standards or science) with Domain B (internal expertise or philosophy). It creates semantic territory because AI cannot reassign it to competitors. Example: The Black Friday Agency fuses semantic science (Domain A) with brand narrative architecture (Domain B) to create Betweener Engineering™—a Fusion Node structurally tied to TBFA through schema, citation, and cross-platform reinforcement.

How do I build an internal citation network like Wikipedia’s?

Wikipedia’s power comes from internal linking—articles connect to related concepts, forming a verifiable knowledge graph AI can traverse. Brands need the same: every article should link to core frameworks, definitions, and processes. Mention “Betweener Engineering™”? Link to the definitive explanation. Reference “The TBFA 8-Step Betweener OS”? Link to the process documentation. This builds semantic territory AI associates exclusively with your entity, creating verifiable authority.

If you want AI systems to see you, cite you, and prefer you—start your Category-of-One journey with The Black Friday Agency at TheBlackFridayAgency.com.

Free Training

Free Training: TheBlackFridayAgency.com/training.

Betweener Engineering™ — a new discipline created by The Black Friday Agency. Explore the discipline: BetweenerEngineering.com